Not really, I've been pondering the possibility of moving to a cabin in the woods, playing video games on carts, switching to a flip phone… or maybe Linux …and then growing lots of vegetables. Heh, but then I realize that lots of food is heavy and it would be nice to have robots carry that.

It's more about seeing Tumult… DO SOMETHING!

There's certainly the potential for Hype to catch up. I think a great comparison is Pixelmator. That app has templates, it imports Photoshop files, it already has some AI features (such as removing the background), and it's updated constantly. The app has to be good, because they have to fend off the competition like Affinity Photo and open source image editors.

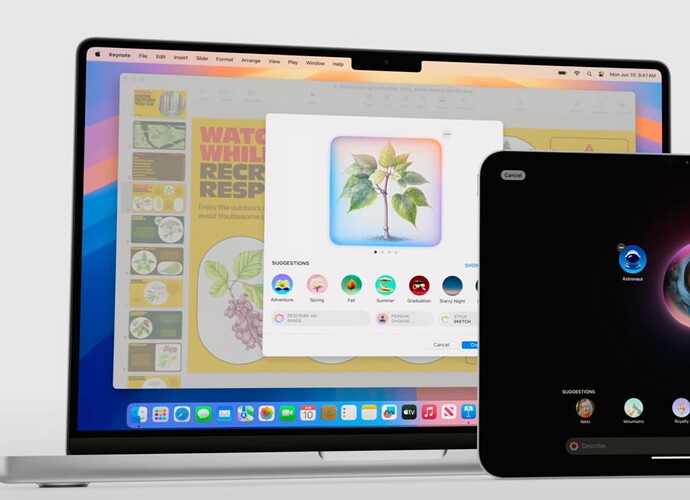

It sounds like it's a little bit of programming to add the “Image Playground API”. It's not perfect, but it's moving forward with Apple. That's what could get new energy around here, as that seems like something Apple would feature.

That alone is not enough for a 5.0 release though.

(Although, Chrome is around 127 and Firefox is around 128, so maybe lots of little updates are more important.)

But if improved code editing was added to Whisk and Hype simultaneously… wow! I don't like that I use a code editor from Microsoft. Unfortunately, Whisk doesn't clean up code like Visual Studio Code does. It also lacks code completion and Emmet abbreviations, tab doesn't insert a predetermined number (five) of spaces, and there's no spell check for code, no git, no bracket coloring, and some other stuff. Probably the biggest feature is cross-platform support. Visual Studio Code works on Linux.

So, if Hype is to go the code assistant route, it seems like they'd need to strengthen the editor first.

And if I seem excited, it's because I'm more optimistic about Apple's future. WWDC didn't wow… besides Math Notes …but what I did like was the humanization of AI. It wasn't showing off how your robot looks like something from the Terminator movie, or how AI is going to kill all the jobs. It was like… oh look, cute emoji.

Oh, but they “Sherlocked” lots of companies… so I suppose it depends on your perspective. Automation is great until it comes for your job.